In the first part of this article, I covered applications that hold an important place in the history of artificial intelligence and introduced ChatGPT. In this second part, I explain how ChatGPT works, where it is used, and the risks it may bring.

Operating Principle

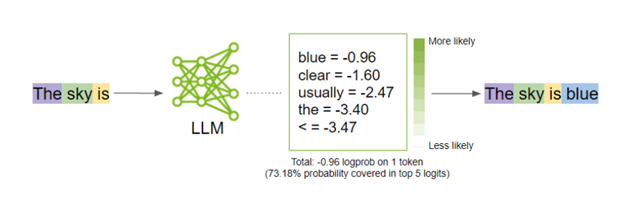

ChatGPT is everywhere in our daily lives! But what lies beneath this technology? Essentially, the algorithm works by predicting the next word. A language model takes in a sequence of words and returns a probability distribution for the next word. Strictly speaking, the model works on tokens, which are words or pieces of words. The length of the input sequence is limited by the size of the context window, which is specific to each language model (for example, 4,096 tokens, not words, for the early ChatGPT models).

As seen in the image above, when we type "The sky is", the algorithm predicts that the next word is most likely "blue" and continues the sentence. Because it repeats this prediction one word at a time, it answers every question we ask as a continuation of the text we typed.
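To make the idea concrete, here is a minimal sketch of next-word prediction. The scores in `logits` are invented for illustration and the helper names `softmax` and `trim_to_context` are my own assumptions; a real model computes such scores with a neural network over a vocabulary of many thousands of tokens.

```python
import math

# Hypothetical raw scores a model might assign to candidate next words
# after the prompt "The sky is". These numbers are invented.
logits = {"blue": 4.0, "clear": 2.5, "falling": 1.0, "green": 0.5}


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def trim_to_context(tokens, context_window):
    """Keep only the most recent tokens that fit in the context window."""
    if context_window <= 0:
        return []
    return tokens[-context_window:]


probs = softmax(logits)
next_word = max(probs, key=probs.get)  # greedy choice: the most likely word

prompt = ["The", "sky", "is"]
print(trim_to_context(prompt, context_window=2))  # ['sky', 'is']
print(next_word)  # blue
```

Picking the single most likely word is only one possible strategy; chat applications usually sample from the distribution, which is why the same question can get different answers.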

Now let's take a technical look at this algorithm that predicts the next word. These models contain many parameters. What we call parameters are actually the weights of the neural network. For example, the open-source llama-2-70b model published by Meta has 70 billion parameters. Since each parameter takes 2 bytes, the parameter file is 140 gigabytes. Producing these parameters means compressing roughly 10 terabytes of text collected from the internet, which is estimated to require about 6,000 GPUs (graphics processing units) running for around 12 days, at a cost of roughly 2 million dollars.

Sam Altman, OpenAI's CEO and co-founder, mentioned in an interview that training GPT-4 cost over 100 million dollars! It is claimed that GPT-4 has 1 trillion parameters, but this is currently only speculation, as OpenAI hasn't disclosed the full training figures for GPT-4.
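The arithmetic behind these figures can be checked in a few lines. This is only a back-of-the-envelope sketch using the numbers quoted above; the cost per GPU-hour is a value I derive from them, not a published price.

```python
# Size of the parameter file: 70 billion parameters at 2 bytes each.
params = 70_000_000_000
bytes_per_param = 2
size_gb = params * bytes_per_param / 1e9  # decimal gigabytes

# Training effort, using the estimates quoted in the text.
gpus = 6_000
days = 12
gpu_hours = gpus * days * 24

# Derived, not quoted: the implied cost of one GPU for one hour.
cost_usd = 2_000_000
cost_per_gpu_hour = cost_usd / gpu_hours

print(f"Parameter file: {size_gb:.0f} GB")               # 140 GB
print(f"GPU-hours: {gpu_hours:,}")                       # 1,728,000
print(f"Implied cost: ${cost_per_gpu_hour:.2f} per GPU-hour")  # $1.16
```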

The accuracy of ChatGPT models is a crucial point. If these models are not guided with the right commands (prompts), they are more likely to produce incorrect information, known as hallucinations. Prompts do not train the model; they tell it what task to perform and what type of response to generate. The importance of prompt engineering stems from its ability to steer AI models toward desired results and to reduce undesired outcomes. Properly designed prompts make the model's outputs more predictable and more consistent for a specific task or domain. Therefore, providing as much detail as possible when asking ChatGPT a question, and including examples where you can, improves the accuracy of the results and reduces the likelihood of made-up responses.
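As a small illustration of "detail plus examples", the sketch below assembles a prompt from a task description, some context, and a couple of worked examples. The function `build_prompt` and the `Input:`/`Output:` layout are my own assumptions for illustration, not an official format; the resulting text can be pasted into any chat application.

```python
def build_prompt(task, context, examples, question):
    """Assemble a detailed prompt with worked examples (few-shot style)."""
    lines = [f"Task: {task}", f"Context: {context}", ""]
    for sample_input, sample_output in examples:
        lines.append(f"Input: {sample_input}")
        lines.append(f"Output: {sample_output}")
        lines.append("")
    # End with the real question and an open "Output:" for the model to fill.
    lines.append(f"Input: {question}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_prompt(
    task="Classify the customer message as 'complaint' or 'request'.",
    context="Messages come from customers of a machinery service company.",
    examples=[
        ("The machine broke down again after the repair.", "complaint"),
        ("Can you send me a quote for a new filter?", "request"),
    ],
    question="I would like to book a maintenance visit next week.",
)
print(prompt)
```

Compared with simply typing the last message on its own, this version tells the model what the task is, who the audience is, and what a good answer looks like.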

Application and Usage Examples

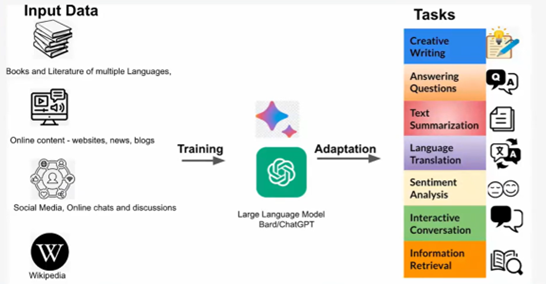

There are many applications today that utilize this technology. The best-known ones include OpenAI ChatGPT, Google Gemini (formerly Bard), and xAI Grok, which are produced by different companies but serve the same purpose. We can use these applications and many others in many areas of our lives. They can give us personal support with tasks such as writing emails and cover letters, creating lists, generating images and describing images in detail, coding, summarizing, and writing poems and song lyrics.

Companies can integrate ChatGPT into their customer service and support systems to answer users' questions or solve their problems. Thanks to its collaboration with OpenAI, Microsoft also builds OpenAI technology into its own products. Microsoft 365 Copilot, recently announced by Microsoft, will integrate artificial intelligence into the Office tools and looks set to make work life easier in many respects.

While talking about how companies benefit from artificial intelligence, I can't skip mentioning our artificial intelligence applications at Borusan Cat, which are among the technologies that support our proactive business model 😊 In keeping with our company’s purpose statement, "We Create Solutions for a Better World", it is very important for us to use technology for the benefit of this world. In line with this, we utilize artificial intelligence both in our business processes and in the solutions we offer to our customers.

With our artificial intelligence application, Müneccim, which predicts faults in machinery before they occur, we are digitizing traditional processes and playing a pioneering role in the transformation of our industry. In this application, by processing data from the machines with algorithms, we can accurately predict which machines need service checks and are at risk of breakdown. Through our super application, Boom360, we offer our customers "Master's Ear", an artificial intelligence application that can detect faults by listening to the sound of a machine for as little as 30 seconds. Similarly, when customers purchase parts through Boom360, our indispensable product recommendation engine predicts which other parts they may want to buy alongside and recommends them. Our artificial intelligence algorithm, RFMS, predicts which customers are likely to purchase machinery and supports our sales consultants.

I can't help but mention how proud we are that our use of artificial intelligence technologies in our operations has been taken up as a case study at Harvard Business School!
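To give a feel for how a "bought alongside" recommendation can work in principle, here is a minimal sketch based on counting which parts appear together in past orders. This is a generic textbook technique with invented data and a hypothetical `recommend` helper; it is not a description of how the Boom360 recommendation engine is actually built.

```python
from collections import Counter
from itertools import combinations

# Invented order history; each set is one basket of part names.
orders = [
    {"oil filter", "air filter", "engine oil"},
    {"oil filter", "engine oil"},
    {"air filter", "fuel filter"},
    {"oil filter", "engine oil", "fuel filter"},
]

# Count how often each pair of parts was bought in the same order.
pair_counts = Counter()
for basket in orders:
    for first, second in combinations(sorted(basket), 2):
        pair_counts[(first, second)] += 1


def recommend(part, top_n=1):
    """Return the parts most often bought together with `part`."""
    scores = Counter()
    for (first, second), count in pair_counts.items():
        if first == part:
            scores[second] += count
        elif second == part:
            scores[first] += count
    return [name for name, _ in scores.most_common(top_n)]


print(recommend("oil filter"))  # ['engine oil']
```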

Risks

Technologies can be either beneficial or harmful depending on how they are used. One concern is the privacy of corporate and personal data: you need to make sure that the application you are using will not store or reuse the data you enter. As I mentioned before, since the models respond to your commands, a question that is not asked clearly and in detail may result in incorrect information. Fraud cases are increasing due to technologies such as voice and image cloning with Generative AI. In some areas, it is becoming increasingly difficult for us to tell what has been produced by artificial intelligence.

Maybe I had ChatGPT write this entire article you are reading, who knows 😊

Thank you very much for taking the time to read!



He has been working on different topics in the field of artificial intelligence and data science for 14 years. At Borusan Cat, where he is currently the Lead Data Scientist, he has been working on artificial intelligence since 2019. A motorsports enthusiast, Yasin enjoys reading about psychoanalysis, working with data, and trying out new technologies.